RPF Process

Refer to Figure 15-5 for this next illustration of RPF. Here is a simplified routing table based on the perimeter router's configuration:

    Network          Interface
    199.1.1.0/24     E1
    199.1.2.0/24     E0
    199.1.3.0/24     E0
    199.1.4.0/24     E0
    199.1.5.0/24     E0
    199.1.6.0/24     E0
    199.1.7.0/24     E0
    0.0.0.0/0        E1

Figure 15-5. Unicast RPF Example


As an example, assume that the perimeter router receives a packet on E0 with a source IP address of 199.1.0.5. With RPF, the router knows that this packet is not valid. None of the specific 199.1.x.0/24 routes covers 199.1.0.5, so the best route back to that address is the default route out E1, not E0. In this instance, the router drops the packet. Basically, the router compares the source IP address with the routes in its routing table to make sure that the packet was received on the interface the router itself would use to reach that source. The router matches source IP addresses only against best paths (the ones populated in the routing table).
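The comparison just described can be modeled in a few lines of Python. This is a minimal sketch, not Cisco's implementation. It assumes that the simplified routing table above stands in for the CEF FIB and that the best path is chosen by longest-prefix match. The names `ROUTES`, `best_route_interface`, and `rpf_check` are hypothetical.

```python
import ipaddress
from typing import List, Optional, Tuple

# Simplified routing table from the example: (prefix, outgoing interface).
# A real router would perform this lookup in the CEF FIB instead.
ROUTES: List[Tuple[str, str]] = [
    ("199.1.1.0/24", "E1"),
    ("199.1.2.0/24", "E0"),
    ("199.1.3.0/24", "E0"),
    ("199.1.4.0/24", "E0"),
    ("199.1.5.0/24", "E0"),
    ("199.1.6.0/24", "E0"),
    ("199.1.7.0/24", "E0"),
    ("0.0.0.0/0", "E1"),
]


def best_route_interface(source: str, routes: List[Tuple[str, str]]) -> Optional[str]:
    """Return the interface of the longest-prefix route covering source, or None."""
    addr = ipaddress.ip_address(source)
    best_len, best_iface = -1, None
    for prefix, iface in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and net.prefixlen > best_len:
            best_len, best_iface = net.prefixlen, iface
    return best_iface


def rpf_check(source: str, in_iface: str, routes: List[Tuple[str, str]] = ROUTES) -> bool:
    """Strict RPF: accept only if the best route back to source uses in_iface."""
    return best_route_interface(source, routes) == in_iface


print(rpf_check("199.1.0.5", "E0"))   # False: best route back is the default via E1
print(rpf_check("199.1.3.20", "E0"))  # True: 199.1.3.0/24 is reached via E0
```

The check asks only one question: which interface the router would use to reach the source. It does not ask whether the destination is reachable.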

If an inbound ACL is applied to the interface on which RPF is enabled, the router first checks the ACL and then performs its RPF check.
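This ordering means that a packet rejected by the ACL never reaches the RPF check. The Python sketch below models that sequence under several assumptions. The inbound ACL is hypothetical and uses first-match semantics with an implicit deny at the end. The two-route table is also hypothetical, and the function names are made up. The sketch illustrates only the order of the two checks, not how IOS processes packets internally.

```python
import ipaddress

# Hypothetical inbound ACL on E0: first match wins, implicit deny at the end.
ACL = [("deny", "10.0.0.0/8"), ("permit", "0.0.0.0/0")]

# Hypothetical routing table: (prefix, outgoing interface).
ROUTES = [("199.1.2.0/24", "E0"), ("0.0.0.0/0", "E1")]


def acl_permits(source):
    """Evaluate the ACL entries in order; the first matching entry decides."""
    addr = ipaddress.ip_address(source)
    for action, prefix in ACL:
        if addr in ipaddress.ip_network(prefix):
            return action == "permit"
    return False  # implicit deny


def rpf_passes(source, in_iface):
    """Strict RPF via longest-prefix match over ROUTES."""
    addr = ipaddress.ip_address(source)
    matches = [(ipaddress.ip_network(p), i) for p, i in ROUTES
               if addr in ipaddress.ip_network(p)]
    if not matches:
        return False
    _, best_iface = max(matches, key=lambda m: m[0].prefixlen)
    return best_iface == in_iface


def inbound(source, in_iface):
    """Apply the inbound ACL first, then the RPF check; report which stage decided."""
    if not acl_permits(source):
        return ("drop", "acl")
    if not rpf_passes(source, in_iface):
        return ("drop", "rpf")
    return ("forward", None)


print(inbound("10.1.1.1", "E0"))   # ('drop', 'acl'): rejected before RPF runs
print(inbound("199.1.0.5", "E0"))  # ('drop', 'rpf')
print(inbound("199.1.2.7", "E0"))  # ('forward', None)
```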

NOTE

For RPF to function, CEF must be enabled on the router. The router performs the lookup in the CEF Forwarding Information Base (FIB), which is built from the router's routing table. In other words, RPF does not consult the routing table directly. Instead, it uses the CEF FIB to detect spoofed packets.

RPF Usage

RPF works best at the perimeter of your network. If you use it inside your network, it works best when your routers have more specific routes. With route summarization, a spoofing attack could be under way, and it would be difficult to determine which part of the summarized address range the attack is coming from. For external threats, the more ISPs and companies that use RPF, the more likely it is that spoofing attacks can become a thing of the past. However, the more point-of-presence (POP) connections an ISP has, the more difficult it becomes to use RPF, because multiple paths might exist to the source. For ISPs directly connected to their customers, the best solution is to use RPF as close to the sources of the addresses as possible.
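To see why more specific routes help inside the network, the following Python sketch compares a hypothetical internal router's RPF decision with specific routes and with a summary route. All addresses and interface names are illustrative assumptions. The summary 10.1.0.0/22 also covers 10.1.0.0/24 and 10.1.3.0/24, which nothing behind E0 actually uses. As a result, a packet that forges a source address in that unused space passes the check.

```python
import ipaddress


def rpf_ok(source, in_iface, routes):
    """Strict RPF: longest-prefix match over (prefix, interface) pairs."""
    addr = ipaddress.ip_address(source)
    best_len, best_iface = -1, None
    for prefix, iface in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and net.prefixlen > best_len:
            best_len, best_iface = net.prefixlen, iface
    return best_iface == in_iface


# Hypothetical internal router: only 10.1.1.0/24 and 10.1.2.0/24 sit behind E0.
SPECIFIC = [("10.1.1.0/24", "E0"), ("10.1.2.0/24", "E0"), ("0.0.0.0/0", "E1")]
# The same subnets advertised as a single summary route.
SUMMARIZED = [("10.1.0.0/22", "E0"), ("0.0.0.0/0", "E1")]

forged = "10.1.3.9"  # unused address inside the summary, arriving on E0
print(rpf_ok(forged, "E0", SPECIFIC))    # False: only the default (via E1) matches
print(rpf_ok(forged, "E0", SUMMARIZED))  # True: the summary hides the forgery
```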

RPF is best deployed on perimeter routers in networks that have a single connection to the outside world. RPF also works in multiple-connection environments and on internal routers, but there it might not be the best way to detect spoofed packets. Figure 15-6 shows an example of the problem that can occur when using RPF in a dual-connection network. In this example, the perimeter router uses interface S0 to send traffic to the remote site. However, based on BGP, the Internet has determined that the best path for returning traffic to the network on the left is through S1 on the perimeter router. This asymmetric routing creates a problem for RPF on the perimeter router: according to its routing table, the router expects this traffic to arrive on S0. In this instance, the router would drop the returning traffic.
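The dual-connection problem can be reproduced with the same kind of strict RPF model. This Python sketch assumes a hypothetical remote-site prefix, 203.0.113.0/24 (a documentation range), which the perimeter router reaches via S0. It also assumes that the Internet returns that site's replies on S1. The interface names follow Figure 15-6, and the helper name is hypothetical.

```python
import ipaddress


def rpf_ok(source, in_iface, routes):
    """Strict RPF: longest-prefix match over (prefix, interface) pairs."""
    addr = ipaddress.ip_address(source)
    best_len, best_iface = -1, None
    for prefix, iface in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and net.prefixlen > best_len:
            best_len, best_iface = net.prefixlen, iface
    return best_iface == in_iface


# Perimeter router's own view: traffic to the remote site leaves via S0.
ROUTES = [("203.0.113.0/24", "S0"), ("0.0.0.0/0", "S0")]

reply_from_remote = "203.0.113.25"
# BGP elsewhere sends the reply back through S1, so strict RPF rejects it.
print(rpf_ok(reply_from_remote, "S1", ROUTES))  # False: legitimate reply dropped
print(rpf_ok(reply_from_remote, "S0", ROUTES))  # True: only S0 is accepted
```

Nothing about the reply is forged. It fails only because the return path differs from the router's own forwarding path.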

Figure 15-6. RPF and Dual-Connection Problems


One exception to the single-connection guideline is dialup access on an access server. Dialup access is one of the main sources of spoofing attacks. By using RPF on your access servers, you can limit your exposure to this method of spoofing attack.

An alternative to RPF is to use ACLs. However, the main problems with ACLs are their performance impact and their day-to-day maintenance. RPF, on the other hand, relies on information from the routing table, which can be built statically or dynamically, so no separate filter list has to be maintained. And because CEF handles the lookup, RPF does not impose a significant performance hit.

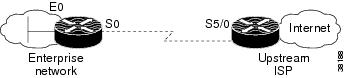

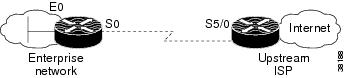

In this example, Unicast RPF is applied at interface S0 on the enterprise router to protect against packets with malformed or forged source addresses arriving from the Internet. Unicast RPF is also applied at interface S5/0 on the ISP router to protect against such packets arriving from the enterprise network.

Figure 40 Enterprise Network Using Unicast RPF for Ingress Filtering

Using the topology in Figure 40, a typical configuration on the ISP router (assuming that CEF is turned on) would be as follows:

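    ip cef
    !
    ! Loopback whose address Serial 5/0 borrows (ip unnumbered)
    interface loopback 0
     description Loopback interface on Gateway Router 2
     ip address 192.168.3.1 255.255.255.255
     no ip redirects
     no ip directed-broadcast
     no ip proxy-arp
    !
    ! Customer-facing link: strict Unicast RPF on incoming packets
    interface Serial 5/0
     description 128K HDLC link to ExampleCorp WT50314E R5-0
     bandwidth 128
     ip unnumbered loopback 0
     ip verify unicast reverse-path
     no ip redirects
     no ip directed-broadcast
     no ip proxy-arp
    !
    ! Customer /22 (192.168.8.0 through 192.168.11.255, which includes
    ! the LAN address 192.168.10.1) is reached via Serial 5/0
    ip route 192.168.8.0 255.255.252.0 Serial 5/0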
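The effect of this configuration on the ISP router can be sketched in Python. The model assumes that the ISP router's best route for the customer block is the static route out Serial 5/0, with the block written as 192.168.8.0/22, the /22 network that contains 192.168.10.1. It also adds a hypothetical default route toward an interface named `Upstream`, which is not part of the configuration above, to stand in for the rest of the ISP's routing table.

```python
import ipaddress


def rpf_ok(source, in_iface, routes):
    """Strict RPF: longest-prefix match over (prefix, interface) pairs."""
    addr = ipaddress.ip_address(source)
    best_len, best_iface = -1, None
    for prefix, iface in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and net.prefixlen > best_len:
            best_len, best_iface = net.prefixlen, iface
    return best_iface == in_iface


# Static customer route, plus a hypothetical upstream default route.
ISP_ROUTES = [("192.168.8.0/22", "Serial5/0"), ("0.0.0.0/0", "Upstream")]

print(rpf_ok("192.168.10.20", "Serial5/0", ISP_ROUTES))  # True: customer's own address
print(rpf_ok("198.51.100.7", "Serial5/0", ISP_ROUTES))   # False: forged source on customer link
```

In effect, the customer can send into the ISP only with source addresses that the ISP routes back to that same link.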

The gateway router configuration of the enterprise network (assuming that CEF is turned on) would look similar to the following:

    ip cef
    !
    ! LAN interface; its /22 is the enterprise's connected network
    interface Ethernet 0
     description ExampleCorp LAN
     ip address 192.168.10.1 255.255.252.0
     no ip redirects
     no ip directed-broadcast
     no ip proxy-arp
    !
    ! Internet-facing link: strict Unicast RPF on incoming packets
    interface Serial 0
     description 128K HDLC link to ExampleCorp Internet Inc WT50314E C0
     bandwidth 128
     ip unnumbered ethernet 0
     ip verify unicast reverse-path
     no ip redirects
     no ip directed-broadcast
     no ip proxy-arp
    !
    ! Single default route toward the ISP
    ip route 0.0.0.0 0.0.0.0 Serial 0

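Notice that Unicast RPF works with a single default route; there are no additional routes or routing protocols. Network 192.168.8.0/22 (the /22 that contains the LAN address 192.168.10.1) is directly connected on Ethernet 0, so the router's best route back to any address in that range points out Ethernet 0, not Serial 0. Hence, packets arriving from the Internet on Serial 0 with a source address in the range 192.168.8.0/22 will be dropped by Unicast RPF.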
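The enterprise router's decision can be modeled the same way. The text states that Unicast RPF works with the single default route, so this Python sketch treats the default route via Serial 0 as a valid RPF match. The connected LAN is written as 192.168.8.0/22, the /22 network that contains 192.168.10.1. The function and variable names are hypothetical.

```python
import ipaddress


def rpf_ok(source, in_iface, routes):
    """Strict RPF: longest-prefix match over (prefix, interface) pairs."""
    addr = ipaddress.ip_address(source)
    best_len, best_iface = -1, None
    for prefix, iface in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and net.prefixlen > best_len:
            best_len, best_iface = net.prefixlen, iface
    return best_iface == in_iface


# Connected LAN on Ethernet 0 plus the single default route toward the ISP.
ENT_ROUTES = [("192.168.8.0/22", "Ethernet0"), ("0.0.0.0/0", "Serial0")]

print(rpf_ok("198.51.100.7", "Serial0", ENT_ROUTES))     # True: ordinary Internet source
print(rpf_ok("192.168.10.77", "Serial0", ENT_ROUTES))    # False: internal source arriving from outside
print(rpf_ok("192.168.10.77", "Ethernet0", ENT_ROUTES))  # True: same source on the LAN side
```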